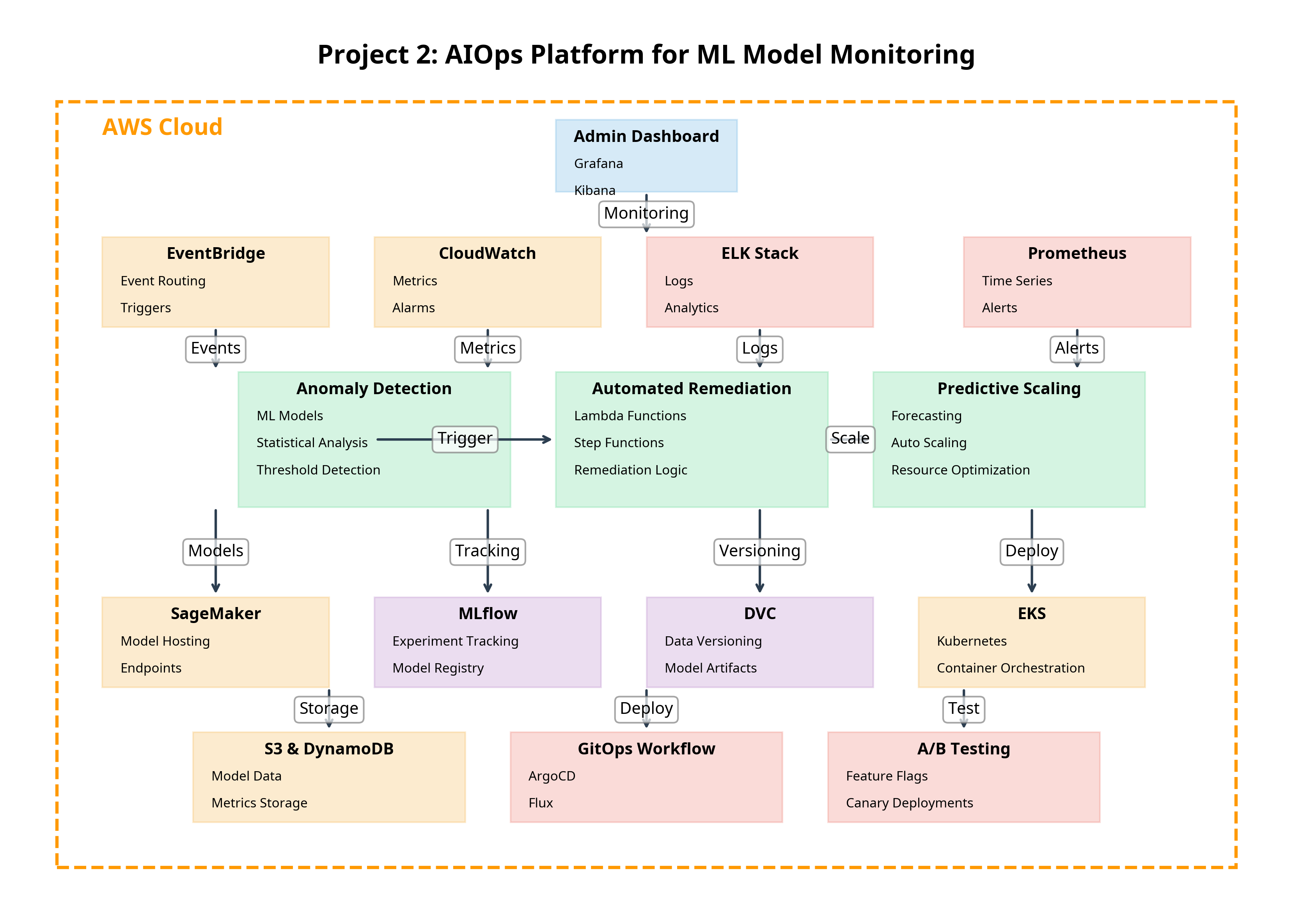

Architecture

Architecture Components

- Model Performance Monitor: Anomaly detection system

- CloudWatch: Metrics collection and storage

- EventBridge: Event-driven orchestration

- Lambda Functions: Remediation actions

- Step Functions: Complex remediation workflows

- MLflow Tracking Server: Experiment tracking

- DVC: Model and data versioning

- S3: Artifact storage

- SageMaker: Model hosting and deployment

- DynamoDB: Baseline storage and configuration

- SNS: Notifications and alerts
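
To make the flow between these components concrete, here is a minimal sketch of the first hop: the Model Performance Monitor compares a metric against a stored baseline and, if it looks anomalous, builds an event for EventBridge to route to a Lambda or Step Functions remediation. Everything specific here is an assumption for illustration, not part of the documented system: the `Baseline` record shape, the z-score rule and its threshold, the metric and endpoint names, and the `Source` and `DetailType` strings. The sketch makes no AWS calls, so it runs without credentials.

```python
import json
from dataclasses import dataclass


@dataclass
class Baseline:
    """Hypothetical baseline record, e.g. as it might be stored in DynamoDB."""
    metric: str
    mean: float
    stddev: float


def is_anomalous(value: float, baseline: Baseline, z_threshold: float = 3.0) -> bool:
    """Flag a value whose z-score against the baseline exceeds the threshold.

    The z-score rule is an assumed stand-in for whatever detection method
    the Model Performance Monitor actually uses.
    """
    if baseline.stddev == 0:
        # Degenerate baseline: any deviation from the mean counts as anomalous.
        return value != baseline.mean
    return abs(value - baseline.mean) / baseline.stddev > z_threshold


def build_event_entry(endpoint: str, value: float, baseline: Baseline) -> dict:
    """Build one entry in the shape EventBridge PutEvents expects.

    The Source and DetailType strings are hypothetical; an EventBridge rule
    would match on them to trigger a remediation target.
    """
    detail = {
        "endpoint": endpoint,
        "metric": baseline.metric,
        "value": value,
        "baseline_mean": baseline.mean,
        "baseline_stddev": baseline.stddev,
    }
    return {
        "Source": "custom.model-monitor",         # hypothetical source name
        "DetailType": "ModelPerformanceAnomaly",  # hypothetical detail type
        "Detail": json.dumps(detail),             # Detail must be a JSON string
        "EventBusName": "default",
    }


if __name__ == "__main__":
    # Hypothetical latency baseline for a SageMaker endpoint.
    baseline = Baseline(metric="ModelLatency", mean=120.0, stddev=10.0)
    observed = 185.0
    if is_anomalous(observed, baseline):
        entry = build_event_entry("my-endpoint", observed, baseline)
        print(json.dumps(entry, indent=2))
```

In a real deployment, an entry like this could be sent with the boto3 `events` client via `put_events(Entries=[entry])`, and an SNS notification could be attached as a second rule target. Keeping detection and event construction as pure functions lets both be unit-tested without AWS access.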